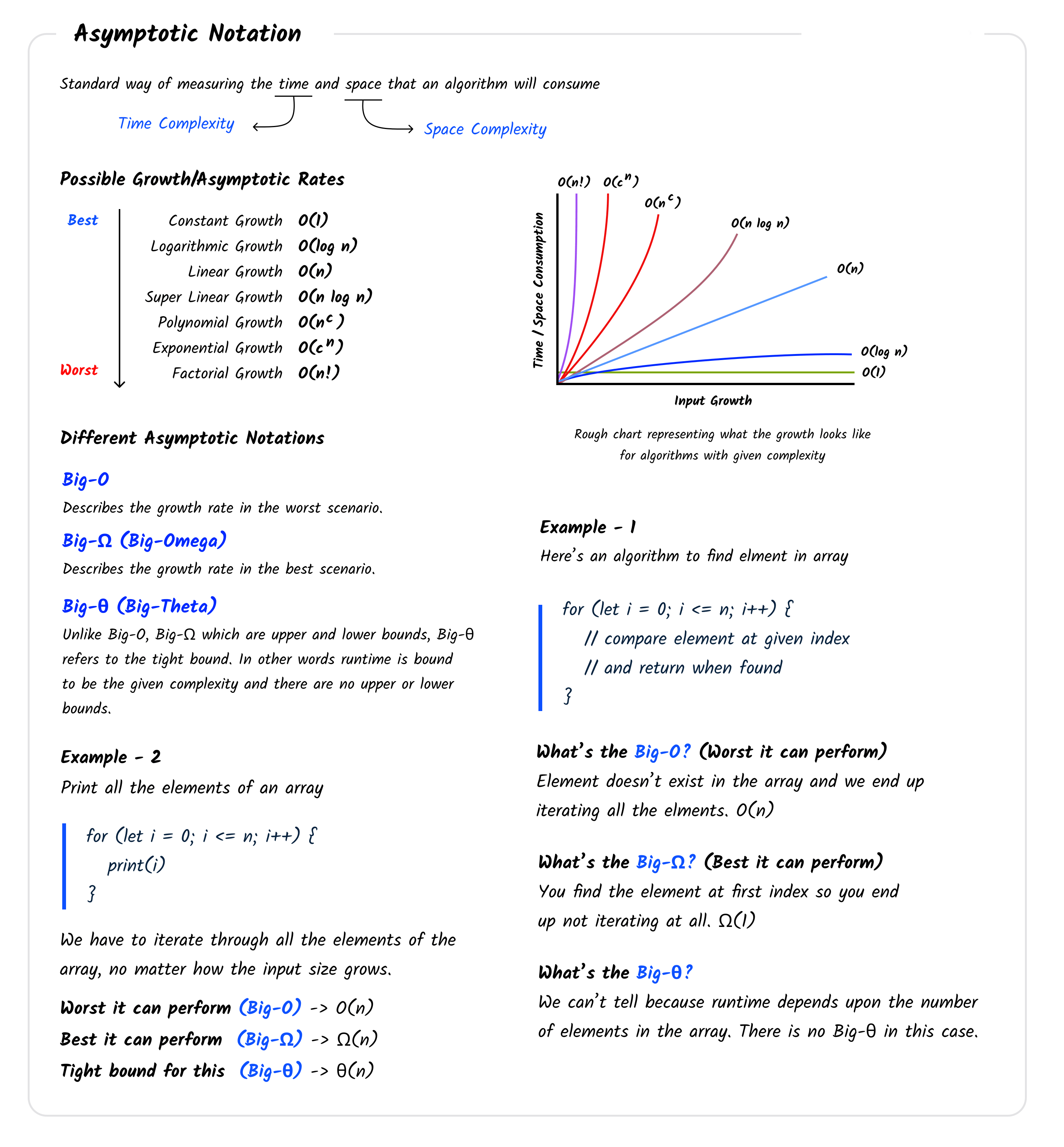

Asymptotic notation is the standard way of describing how the time and space an algorithm consumes grow as its input grows. In one of my previous guides, I covered “Big-O notation”, and many of you asked for a similar guide covering asymptotic notation more broadly. You can find the previous guide here.
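To make "as the input grows" concrete, here is a minimal Python sketch (not from the original guide) that counts basic operations for two hypothetical functions, `count_linear_steps` and `count_quadratic_steps`. It assumes each innermost loop iteration costs one unit of work, which is the usual simplification when reasoning about growth rates.

```python
def count_linear_steps(n: int) -> int:
    """Count basic operations for a single pass over n items (grows like n)."""
    steps = 0
    for _ in range(n):
        steps += 1  # one unit of work per element
    return steps


def count_quadratic_steps(n: int) -> int:
    """Count basic operations for visiting every ordered pair (i, j) of n items (grows like n^2)."""
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1  # one unit of work per (i, j) pair
    return steps


if __name__ == "__main__":
    # Doubling n doubles the linear count but quadruples the quadratic count.
    for n in (10, 20, 40, 80):
        print(f"n={n:>3}  linear={count_linear_steps(n):>5}  quadratic={count_quadratic_steps(n):>5}")
```

The exact counts matter less than how they scale: asymptotic notation keeps only that growth pattern (linear versus quadratic) and ignores constant factors.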

Visual guide by Jean Michel Eid

Asymptotic Notation

Learn the basics of measuring the time and space complexity of algorithms